Prioritized Experience Replay

Prioritized Experience Replay

Introduction

In simplest form, RL agents observe a stream of experience and discard incoming data immediately, after a single update. Two issues with this are:

- Strongly correlated updates that break the i.i.d assumptions of most stochastic-gradient-based algorithms

- The rapid forgetting of possibly rare experiences that would be useful later on (ie. some rare actions, rare states etc)

Experience replay addresses both of these issues. In general, it can reduce the amount of experience required to learn, and replace it with more computation and more memory.

However, RL gant can learn more effectively from some transitions than from others. Transitions may be more or less surprising, redundant, or task-relevant. Some transitions may not be immediately useful to the agent but might become so when the agent competence increases. Prioritized replay memory liberates agents from considering transitions uniformly. In particular, the authers propose to more frequently reply transitions with high expected learning progress, as measured by the magnitude of their TD error (on expectation, it is the BR). The bias will be corrected with importance sampling, loss of diversity will be alleviated with stochastic optimization.

Using a replay memory leads to design choices at two levels: which experiences to store, and which experiences to replay (and how to do so). This paper addresses only the latter: making the most effective use of the replay memory for learning.

Prioritized Replay

Prioritizing with TD Error

The central component of prioritized replay is the criterion by which the importance of each transition is measured. One idealised criterion would be the amount the RL agent can learn from a transition in its current state (expected learning progress). While this measure is not directly accessible, a reasonable proxy is the magnitude of a transition's TD error \(\delta\) (remember the \(E[\delta] = BR\), so \(\delta\) is an unbiased estimate of BR). Which indicates how 'surprising' or unexpected the transition is, in order words, how far the value is from its next-step bootstrap estimate (i.e \(\hat{T} V\)). However, TD-error can have high variance in some circumstances. Some possible alternatives for TD error:

Greedy TD Error Prioritization

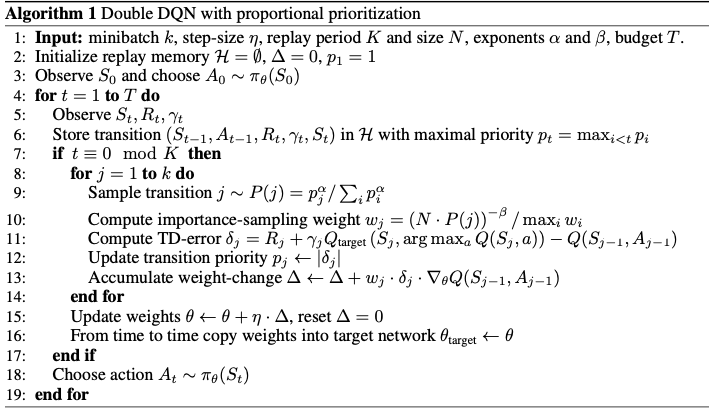

This algorithm stores the last encountered TD error along with each transition in the replay memory. The transition with the largest absolute TD error is replayed from the meomory. A Q-learning update is applied to this transition, which updates the weights in proportion to the TD error. New trains arrive without a known TD-error, so they put them at maximal priority in order to guarantee that all experience is seen at least once.

Some issues:

- TD errors are only updated for the transitions that are replayed or sampled from the memory. (to avoid expensive sweeps over the entire replay memory)

- The transitions that have a low TD error on first visit may not be replayed for a long time or never because they will have low priority.

- It is sensitive to noise spikes, which can be exacerbated by bootstrapping (a large update to \(Q(s, a)\) may result in large updates in some other preceding states). When approximate error (\(\|Q^{\pi} - Q\|\)) appear as another source of noise.

- Errors shrink slowly because the initally high error transitions get replayed frequently. This lack of diversity that makes the system prone to over-fitting to specific training transitions.

Stochastic Prioritization

To overcome these drawbacks, a stochastic sampling method that interpolates between pure greedy prioritization and uniform random sampling is introduced by the authors. like epsilon greedy policy, this method ensures that the probability of being sampled is monotonic in a transition's priority, while guaranteeing a non-zero probability even for the lowest-priority transition. The probability of sampling transition \(i\) is defined as:

\[P(i) = \frac{p^{\alpha}_i}{\sum_{k} p^{\alpha}_k}\]

where \(p_i > 0\) is the priority of transition \(i\). The exponent \(\alpha\) determines how much prioritization is used, which \(\alpha = 0\) corresponding to the uniform case.

There are several choice of priority measure \(p_i\):

- absolute TD error: \(p_i = | \delta_i | + \epsilon\), where \(\epsilon\) is a small positive constant that prevents the edge-case of transitions not being revisited once their error is 0.

- rank based: \(p_i = \frac{1}{rank(i)}\), where \(rank(i)\) is the rank of transition \(i\) when the replay memory is sorted according to \(|\delta_i|\). In this case, \(P\) becomes a power law distribution with exponent \(\alpha\).

Both distributions are monotonic in \(|\delta_i|\), but the latter is likely to be more robust because it is not sensitive to outliers.

Implementation

To efficiently sample from the distribution, the complexity cannot depend on \(N\). For a rank-based variant, we can approximate the cumulative density function with a piecewise linear function with \(k\) segments of equal probability. The segment boundaries can be precomputed. At runtime, we can sample a segment and then sample uniformly among the transitions within it. This works particularly well with mini-batch based learning algorithm. Choose \(k\) to be the size of the mini-batch the sample one transition from each segment (stratified sampling). The proportional variant is different, it is based on sum-tree data structure which can be efficiently updated and sampled from.

Importance Sampling to Correct Bias

The estimation of the expected value with stochastic updates relies on those updates corresponding to the same distribution as its expectation (I think they are talking about the DQN loss, \(E[\| \hat{T}Q_{k} - Q\|]\), where transitions are sampled from the replay memory, since the priority of transitions changes, the underlying distribution changes, in order to fix this, we need to remove some effect of the priority on the shift of distribution). Prioritized replay introduces bias because it changes this distribution in an uncontrolled fashion, and therefore changes the solution that the estimates will converge to (even if the policy and state distribution are fixed). We can correct this bias by using importance-sampling (IS) weights:

\[w_i = \frac{1}{N} \frac{1}{P(i)}\]

Thus, if we have large \(P(i)\), we should make it smaller, so the distribution is uniform.

These weights can be folded into the Q-learning update by using \(w_i \delta_i\) instead of \(\delta\), for stability, they also normalize weights by \(\frac{1}{max_i w_i}\)

Ref

https://danieltakeshi.github.io/2019/07/14/per/#:~:text=Prioritized%20replay%20introduces%20bias%20because,%2Dsampling%20(IS)%20weights.