Policy Gradient (3)

Policy Search Methods (REINFORCE)

We already now that, if we have sampled from the correct distribution and have an estimate for \(Q^{\pi_{\theta}} (X, A)\), we have an unbiased sample of the policy gradient (Discounted Setting):

\[\gamma^{k} \nabla \log \pi_{\theta}(A | X) Q^{\pi_{\theta}} (X, A)\]

The remaining issue is the computation of \(Q^{\pi_{\theta}} (X, A)\). This is essentially a Policy Evaluation problem, and we may use various action-value function estimators.

REINFORCE

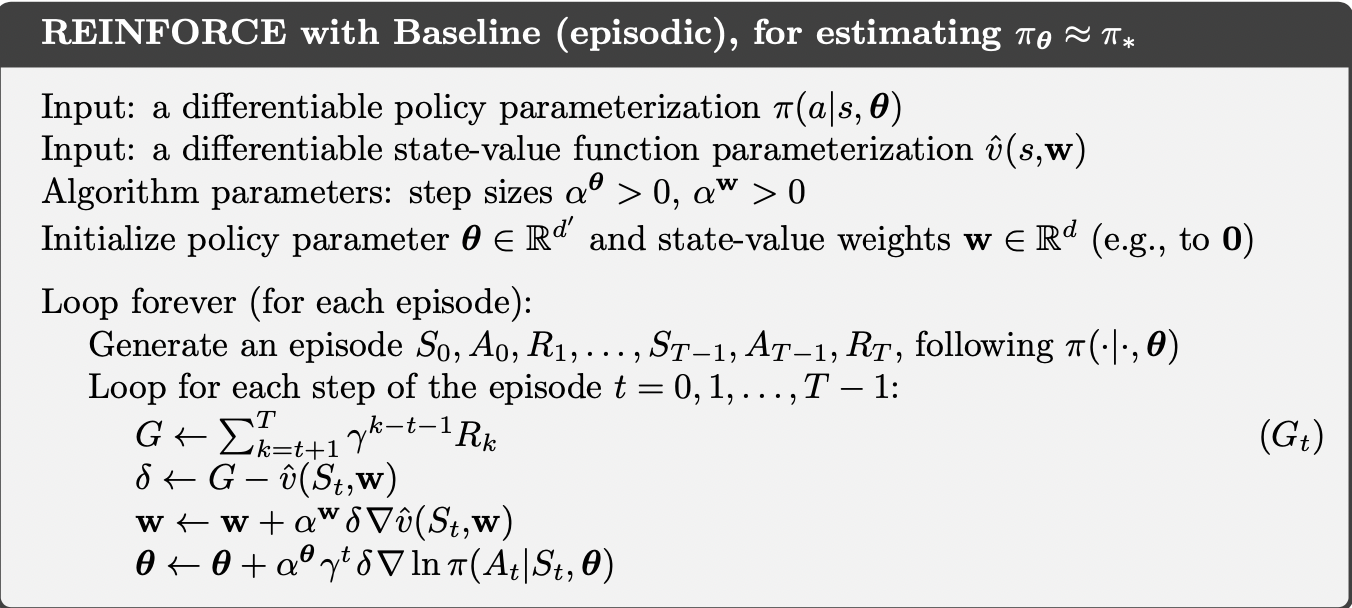

A simple approach uses MC estimates to estimate \(Q^{\pi_{\theta}} (X, A)\). This would lead to what is known as the REINFORCE algorithm:

In the on-policy setting when agent follows \(\pi_{\theta}\):

- It generates the sequence \(X_1, A_1, R_1\) with \(A_{t} \sim \pi_{\theta} (\cdot | X_t), X_t \sim \rho^{\pi_{\theta}}\).

- Then \(G_{t}^{\pi_{\theta}} = \sum_{k \geq t} \gamma^{k - t} R_{k}\) is an unbiased estimator of \(Q^{\pi_{\theta}} (X_t, A_t)\).

- We replace the action-value function with the estimate.

- The return however has high variance even though it is unbiased.

- We can use a state dependent baseline function (usually the value function) to reduce the variance.

Implementation

Related Posts