Value Function Approximation (2)

Value Function Approximation (Algorithms)

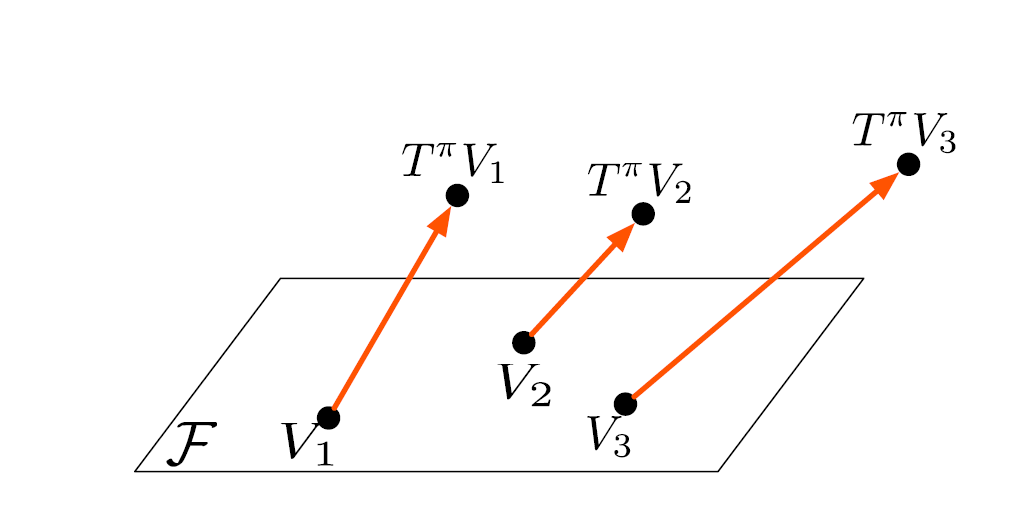

Approximate Value Iteration

Population Version

Recall that the procedure for VI is:

\[V_{k+1} \leftarrow T^{\pi}V_{k}\] \[V_{k+1} \leftarrow T^{*}V_{k}\]

One way to develop its approximation version is to perform each step only approximately (i.e find \(V_{k+1} \in \mathbf{F}\)) such that:

\[V_{k+1} \approx TV_{k}\]

Where \(T\) can be \(T^*\) or \(T^\pi\).

We start from a \(V_0 \in \mathbf{F}\), and then at each iteration \(k\) of AVI, we solve (i.e \(p\) is often 2):

\[V_{k+1} \leftarrow \underset{V \in \mathbf{F}}{\arg\min} \|V - TV_{k}\|^p_{p, \mu}\]

At each iteration, \(TV_k\) (our target) may not be within \(\mathbf{F}\) anymore even though \(V_{k} \in \mathbf{F}\), we may have some approximation error at each iteration of AVI. The amount of error depends on how expressive \(\mathbf{F}\) is and how much \(T\) can push a function within \(\mathbf{F}\) outside that space.

Batch Version

The objective of AVI cannot be computed because:

- \(\mu\) is unknown

- Environment \(R, P\) is often not available, thus \(TQ_k\) cannot be computed.

If we have samples in the form of \((X, A, R, X^{\prime})\), we can compute the unbiased sample of \(TQ_k\):

\[\hat{T}^{\pi}Q_k = R + \gamma Q(X^{\prime}, A^{\prime})\] \[\hat{T}^{*} Q_k = R + \gamma \max_{a^{\prime} \in A} Q(X^{\prime}, a^{\prime}) \]

Where \(A^{\prime} \sim \pi(\cdot | X^{\prime})\)

The question is: can we replace \(TQ_k\) with \(\hat{T}Q_k\)?

Given any \(Z = (X, A)\) \[\begin{aligned} E_{\hat{T}Q_k}[|Q(Z) - \hat{T}Q_k (Z)|^2 | Z] &= E_{\hat{T}Q_k}[|Q(Z) - TQ_k (Z) + TQ_k (Z) - \hat{T}Q_k (Z)|^2 | Z]\\ &= E_{\hat{T}Q_k}[|Q(Z) - TQ_k (Z)|^2 | Z] + E_{\hat{T}Q_k}[|TQ_k (Z) - \hat{T}Q_k(Z)|^2 | Z] + 2 E_{\hat{T}Q_k}[(Q(Z) - TQ_k (Z))(TQ_k (Z) - \hat{T}Q_k (Z)) | Z]\\ &= E_{\hat{T}Q_k}[|Q(Z) - TQ_k (Z)|^2 | Z] + E_{\hat{T}Q_k}[|TQ_k (Z) - \hat{T}Q_k(Z)|^2 | Z] \end{aligned}\]Since \(Z \sim \mu\):

\[\begin{aligned} E_{\mu} [E_{\hat{T}Q_k}[|Q(Z) - TQ_k (Z)|^2 | Z] + E_{\hat{T}Q_k}[|TQ_k (Z) - \hat{T}Q_k(Z)|^2 | Z]] &= E_{\mu, \hat{T}Q_k} [|Q(Z) - TQ_k (Z)|^2] + E_{\mu, \hat{T}Q_k} [|TQ_k (Z) - \hat{T}Q_k(Z)|^2]\\ &= \|Q(Z) - TQ_k (Z)\|^2_{2, \mu} + E_{\mu} [Var[\hat{T}Q_k (Z) | Z]] \end{aligned}\]Since the expectation of variance term does not depend on \(Q\), the solution to the surrogate objective is the same as the original objective:

\[\underset{Q \in \mathbf{F}}{\arg\min} \; E_{\mu, \hat{T}Q_k}[|Q(Z) - \hat{T}Q_k (Z)|^2] = \underset{Q \in \mathbf{F}}{\arg\min} \; \|Q(Z) - TQ_k (Z)\|^2_{2, \mu}\]

Similar to ERM, we do not know the environment dynamics \(R, P\) and distribution \(\mu\). We can use samples and estimate the expectation:

\[\frac{1}{N} \sum^{N}_{i=1} |Q(X_i, A_i) - \hat{T}Q_k (X_i, A_i)|^2 = \|Q - \hat{T}Q_k\|^2_{2, D_n}\]

This is the basis of DQN.

Bellman Residual Minimization

Population Version

Recall that:

\[V = T^{\pi}V \implies V = V^{\pi}\]

Under FA, we may not achieve this exact equality, instead:

\[V \approx V^{\pi}\]

Thus, we can formulate our objective:

\[V = \underset{V \in \mathbf{F}}{\arg\min} \|V - T^{\pi}V\|^p_{p, \mu} = \|BR(V)\|^p_{p, \mu}\]

By minimizing this objective (\(p\) is usually 2), we have Bellman Residual Minimization.

This procedure is different from AVI in that we do not mimic the iterative process of VI (which is convergent in the exact case without any FA), but instead directly go for the solution of fixed-point equation (\(V - T^{\pi}V\) instead of \(V - T^{\pi}V_k\)).

If there exists a \(V \in \mathbf{F}\) that makes \(\|V - T^{\pi}V\|^2_{2, \mu} = 0\) and if we assume \(\mu(x) > 0, \; \forall x \in \chi\), we can conclude that \(V(x) = V^{\pi}(x), \; \forall x \in \chi\) so \(V = V^{\pi}\).

Batch Version

Similar to AVI, we may want to replace \(TV\) or \(TQ\) by \(\hat{T}Q\). Thus, our empirical objective is:

\[Q = \underset{Q \in \mathbf{F}}{\arg\min} \frac{1}{N} \sum^N_{i=1} |Q(X_i, A_i) - \hat{T}^{\pi} Q (X_i, A_i)|^2 = \|Q - \hat{T}^{\pi}Q\|^2_{2, D_n}\]

Using \(D_n = \{(X_i, A_i, R_i, X^{\prime}_i)\}^N_{i=1}\)

We can see that \(Q\) appears in both \(\hat{T}^{\pi}Q\) and \(Q\), which is different from AVI and ERM. This causes an issue: the minimizer of \(\|Q - T^{\pi}Q\|^2_{2, \mu}\) and \(\|Q - \hat{T}^{\pi}Q\|^2_{2, \mu}\) are not necessarily the same for stochastic dynamics.

To see this, for any \(Q\) and \(Z = (X, A)\) we compute:

\[E_{\hat{T}^{\pi}Q} [|Q(Z) - \hat{T}Q(Z)|^2 | Z]\]

Then:

\[\begin{aligned} E_{\hat{T}^{\pi}Q}[|Q(Z) - \hat{T}^{\pi}Q (Z)|^2 | Z] &= E_{\hat{T}^{\pi}Q}[|Q(Z) - T^{\pi}Q (Z) + T^{\pi}Q (Z) - \hat{T}^{\pi}Q (Z)|^2 | Z]\\ &= E_{\hat{T}^{\pi}Q}[|Q(Z) - T^{\pi}Q (Z)|^2 | Z] + E_{\hat{T}^{\pi}Q}[|T^{\pi}Q (Z) - \hat{T}^{\pi}Q(Z)|^2 | Z] + 2 E_{\hat{T}^{\pi}Q}[(Q(Z) - T^{\pi}Q (Z))(T^{\pi}Q (Z) - \hat{T}^{\pi}Q (Z)) | Z]\\ &= E_{\hat{T}^{\pi}Q}[|Q(Z) - T^{\pi}Q (Z)|^2 | Z] + E_{\hat{T}^{\pi}Q}[|T^{\pi}Q (Z) - \hat{T}^{\pi}Q(Z)|^2 | Z] \end{aligned}\]Since \(Z \sim \mu\), the first term is:

\[E_{\hat{T}^{\pi}Q, \mu}[|Q(Z) - T^{\pi}Q (Z)|^2] = \|Q - T^{\pi}Q\|^2_{2, \mu}\]

The second term is:

\[E_{\mu}[E_{\hat{T}^{\pi}Q}[|T^{\pi}Q (Z) - \hat{T}^{\pi}Q(Z)|^2 | Z]] = E_{\mu}[Var[\hat{T}^{\pi}Q(Z) | Z]]\]

We can see that the variance term \(Var[\hat{T}^{\pi}Q(Z)\) depends on \(Q\), as we minimize the objective w.r.t \(Q\) in stochastic dynamical systems (for deterministic ones, it is zero), we have $ E_{}[Var[^{}Q(Z) | Z]] $, so the minimizer of the batch version objective is not the same as population version for BRM in stochastic dynamics.

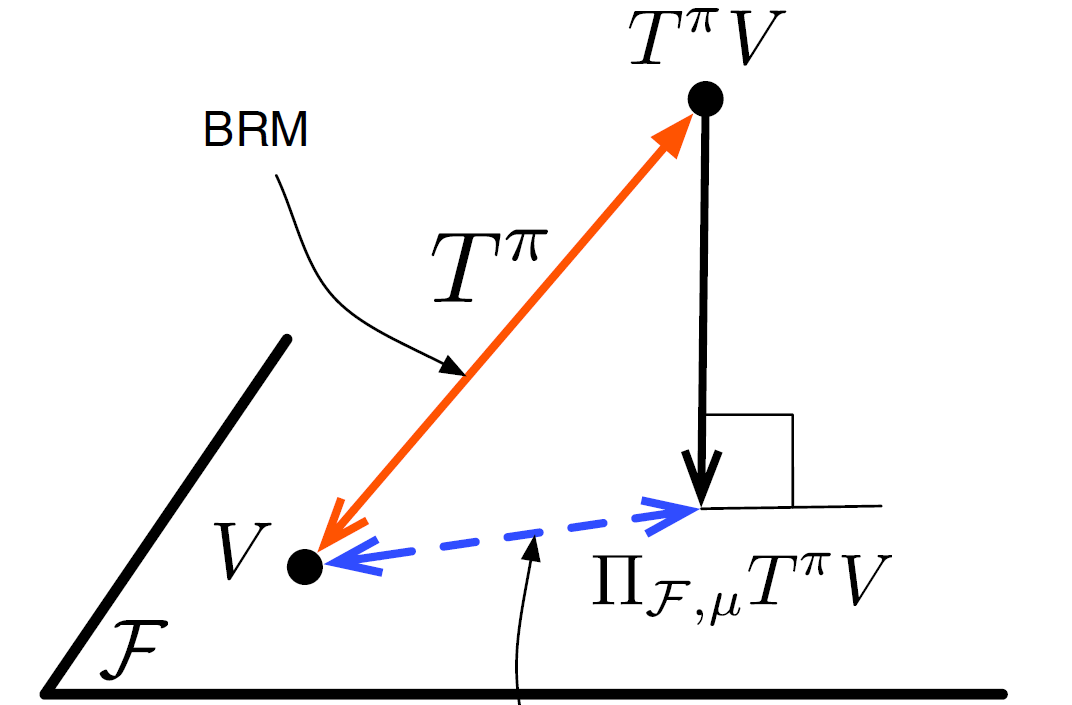

Projected Bellman Error

From BRM, we know that even though \(V \in \mathbf{F}\), \(T^{\pi} V\) may not be in \(\mathbf{F}\). Thus, a good approximator \(V \in \mathbf{F}\) should have distance to \(T^{\pi}V\) small. Thus, we want to find \(V \in \mathbf{F}\) such that \(V\) is the projection of \(T^{\pi}V\) onto the space \(\mathbf{F}\).

We want to find a \(V \in \mathbf{F}\) such that:

\[V = \prod_{\mathbf{F}, \mu} T^{\pi}V\]

Where \(\prod_{\mathbf{F}, \mu}\) is the projection operator.

TRhe projectio operator \(\prod_{\mathbf{F}, \mu}\) is a linear operator that takes \(V \in B(\chi)\) and maps it to closest point on \(F\) measured according to its \(L_{2} (\mu)\) norm:

\[\prod_{\mathbf{F}, \mu} V \triangleq \underset{V^{\prime} \in \mathbf{F}}{\arg\min} \|V^{\prime} - V\|^2_{2, \mu}\]

It has some properties:

- The projection belongs to function space \(\mathbf{F}\): \[\prod_{\mathbf{F}, \mu} V \in \mathbf{F}\]

- If \(V \in \mathbf{F}\), the projection is itself: \[V \in \mathbf{F} \implies \prod_{\mathbf{F}, \mu} V = V\]

- The projection operator onto a subspace is a non-expansion: \[\|\prod_{\mathbf{F}, \mu} V_1 - \prod_{\mathbf{F}, \mu} V_2\|^2_{2, \mu} \leq \|V_1 - V_2\|^2_{2, \mu}\]

Population Version

We can define a loss function based on \(V = \prod_{\mathbf{F}, \mu} T^{\pi}V\):

\[\|V - \prod_{\mathbf{F}, \mu} T^{\pi}V\|^2_{2, \mu}\]

This is called Projected Bellman Error or Mean Square Projected Bellman Error.

We find the value function by solving the following optimization problem:

\[V = \underset{V \in \mathbf{F}}{\arg\min} \|V - \prod_{\mathbf{F}, \mu} T^{\pi}V\|^2_{2, \mu}\]

Since \(V \in \mathbf{F}\), the projection operator is linear:

\[\begin{aligned} V - \prod_{\mathbf{F}, \mu} T^{\pi}V &= \prod_{\mathbf{F}, \mu} V - \prod_{\mathbf{F}, \mu} T^{\pi}V\\ &= \prod_{\mathbf{F}, \mu} (V - T^{\pi}V)\\ &= - \prod_{\mathbf{F}, \mu} (T^{\pi}V - V)\\ &= - \prod_{\mathbf{F}, \mu} (BR(V)) \end{aligned}\]So the objective can be rewritten as:

\[V = \underset{V \in \mathbf{F}}{\arg\min} \|\prod_{\mathbf{F}, \mu} BR(V)\|^2_{2, \mu}\]

Which is the norm of the projection of the Bellman Residual onto \(\mathbf{F}\).

We can think of solving the projected bellman error objective as solving the two coupled optimization problems:

- Find the projection point given value function \(V^{\prime}\): \[V^{\prime\prime} = \underset{V^{\prime\prime} \in \mathbf{F}}{\arg\min} \|V^{\prime\prime} - T^{\pi}V^{\prime}\|^2_{2, \mu}\]

- Find the value function \(V^{\prime}\) on \(\mathbf{F}\) that is closest to the projection point \(V^{\prime\prime}\): \[V^{\prime} = \underset{V^{\prime} \in \mathbf{F}}{\arg\min} \|V^{\prime} - V^{\prime\prime}\|^2_{2, \mu}\]

Least Square Temporal Difference Learning (Population Version)

If \(\mathbf{F}\) is a linear FA with basis functions \(\phi_1, ...., \phi_p\):

\[\mathbf{F}: \{x \rightarrow \boldsymbol{\phi}(x)^T \mathbf{w}; \; \mathbf{w} \in \mathbb{R}^p\}\]

We can find a direct solution to the PBE objective, that is we want to find \(V \in \mathbf{F}\) s.t:

\[V = \prod_{\mathbf{F}, \mu} T^{\pi}V\]

We assume that:

- \(\chi\) is finite and \(|\chi| = N\), \(N \gg p\), each \(\boldsymbol{\phi}_i = [\phi_i(x_1) .... \phi_i(x_N)]^T\) is a \(N\)-dimentional vector.

Then, we define \(\Phi_{N\times p}\) as the matrix of concatenating all features:

\[\Phi = [\boldsymbol{\phi}_1 .... \boldsymbol{\phi}_p]_{N\times p}\]

The value function \(V \in \mathbf{F}\) is then:

\[V_{N \times 1} = \Phi_{N\times p} \mathbf{w}_{p \times 1}\]

Our goal is to find a weight vector \(\mathbf{w} \in \mathbb{R}^p\) such that:

\[V = \prod_{\mathbf{F}, \mu} T^{\pi}V \implies \Phi\mathbf{w} = \prod_{\mathbf{F}, \mu} T^{\pi}(\Phi \mathbf{w})\]

Let \(M = \text{diag}(\mu)\), since:

\[\|V\|^2_{2, \mu} = <V, V>_{\mu} = \sum_{x \in \chi} |V(x)|^2\mu(x) = V^TMV\]

\[<V_1, V_2>_{\mu} = \sum_{x \in \chi} V_1(x)V_2(x) = V^T_1 M V_2\]

Then, the projection operator onto the linear \(\mathbf{F}\) would be:

\[\begin{aligned} \prod_{\mathbf{F}, \mu} V &= \underset{V^{\prime} \in \mathbf{F}}{\arg\min}\|V^{\prime} - V\|^2_{2, \mu}\\ &= \underset{\mathbf{w} \in \mathbb{R}^p}{\arg\min}\|\Phi\mathbf{w} - V\|^2_{2, \mu}\\ &= \underset{\mathbf{w} \in \mathbb{R}^p}{\arg\min} (\Phi\mathbf{w} - V)^T M (\Phi\mathbf{w} - V) \end{aligned}\]By taking the derivative and setting it to zero (assuming that \(\Phi^TM\Phi\) is invertible):

\[\frac{\partial \prod_{\mathbf{F}, \mu} V}{\partial \mathbf{w}} = \Phi^TM(\Phi\mathbf{w} - V) = 0\] \[\implies \mathbf{w^{*}} = (\Phi^TM\Phi)^{-1}\Phi^TMV\]

Then the projection is:

\[\prod_{\mathbf{F}, \mu} V = \Phi \mathbf{w} = \Phi (\Phi^TM\Phi)^{-1}\Phi^TMV\]

Since \(T^{\pi} V = r^{\pi} (x) + \gamma \sum_{x \in \chi, a \in \mathbf{A}}P^{\pi}(x^{\prime} | x, a) \pi(a | s) V(x^{\prime})\), we can write it in vector form for all states:

\[(T^{\pi}V)_{N\times 1} = \mathbf{r}^{\pi}_{N \times 1} + \gamma P^{\pi}_{N\times N} V_{N \times 1}\] \[\implies (T^{\pi}\Phi\mathbf{w})_{N\times 1} = \mathbf{r}^{\pi}_{N \times 1} + \gamma P^{\pi}_{N\times N} \Phi_{N\times p}\mathbf{w}_{p \times 1}\]

Substitute the equation of \(T^{\pi}\Phi\mathbf{w}\) into \(V\) in the projection equation:

\[\prod_{\mathbf{F}, \mu} T^{\pi}\Phi\mathbf{w} = \Phi \mathbf{w} = \Phi (\Phi^TM\Phi)^{-1}\Phi^TM(T^{\pi}\Phi\mathbf{w})\] \[\implies \Phi \mathbf{w} = [\Phi (\Phi^TM\Phi)^{-1}\Phi^TM][\mathbf{r}^{\pi} + \gamma P^{\pi} \Phi\mathbf{w}]\]

Multiply both sides by \(\Phi^T M\) and simply:

\[\Phi^T M \Phi \mathbf{w} = [\Phi^T M\Phi (\Phi^TM\Phi)^{-1}\Phi^TM][\mathbf{r}^{\pi} + \gamma P^{\pi} \Phi\mathbf{w}]\] \[\implies \Phi^T M \Phi \mathbf{w} = \Phi^TM[\mathbf{r}^{\pi} + \gamma P^{\pi} \Phi\mathbf{w}]\] \[\implies \Phi^T M \Phi \mathbf{w} - \Phi^TM[\mathbf{r}^{\pi} + \gamma P^{\pi} \Phi\mathbf{w}] = 0\] \[\implies \Phi^T M[\mathbf{r}^{\pi} + \gamma P^{\pi} \Phi\mathbf{w} - \Phi \mathbf{w}] = 0\] \[\implies \mathbf{w}_{TD} = [\Phi^T M (\Phi - \gamma P^{\pi}\Phi)]^{-1}\Phi^T M \mathbf{r}^{\pi}\]

The solution \(\mathbf{w}_{TD}\) is called Least Square Temporal Difference Method (LSTD).

Notice that the term \(\mathbf{r}^{\pi} + \gamma P^{\pi} \Phi\mathbf{w} - \Phi \mathbf{w} = T^{\pi} V - V = BR(V)\). Hence:

\[\Phi^TM (BR(V)) = 0\] \[\implies <\mathbf{\phi}_i, BR(V)> = 0\]

Thus, LSTD finds a \(\mathbf{w}\) s.t the Bellman Residual is orthogonal to the basis of \(\mathbf{F}\) which is exactly what we want.

Batch Version

We have showed that the solution to \(V = \prod_{\mathbf{F}, \mu} T^{\pi}V\) with linear FA \(V = \Phi\mathbf{w}\) is:

\[\mathbf{w}_{TD} = A^{-1}_{p\times p} \mathbf{b}_{p \times 1}\]

Where:

- \(A^{-1} = \Phi^T M (\Phi - \gamma P^{\pi}\Phi)]^{-1}\)

- \(\mathbf{b} = \Phi^T M \mathbf{r}^{\pi}\)

Expand \(A\) and \(\mathbf{b}\) in terms of summations, we have:

\[A_{ij} = \sum^{N}_{n=1} \Phi^T_{in} \mu(n) (\Phi - \gamma P^{\pi}\Phi)_{ij}\] \[b_i = \sum^N_{n=1} \Phi^T_{in} \mu(n) r^{\pi}_n\]

Thus, in terms of current state \(x\) and next state \(x^{\prime}\):

\[A = \sum_{x \in \chi} \mu (x) \boldsymbol{\phi} (x) [\boldsymbol{\phi}(x) - \gamma \sum_{x^{\prime} \in \chi} P^{\pi}(x^{\prime} | x)\boldsymbol{\phi}(x^{\prime})]^T = E_{\mu}[\boldsymbol{\phi}(X) [\boldsymbol{\phi}(X) - \gamma E_{P^{\pi}}[\boldsymbol{\phi} (X^{\prime})]]^T]\]

\[\mathbf{b} = \sum_{x \in \chi} \mu(x) \boldsymbol{\phi} (x) r^{\pi}(x) = E_{\mu} [\boldsymbol{\phi}(X)r^{\pi}(X)]\]

Given data set \(D_n = \{X_i, R_i, X^{\prime}_i\}^{M}_{i=1}\) with \(X_i \sim \mu\), \(X^{\prime} \sim P^{\pi}(\cdot | X_i)\) and \(R_i \sim R^{\pi}(\cdot | X_i)\), we define the empirical estimator \(\hat{A}_n, \hat{ \mathbf{b}}_n\) as:

\[\hat{A}_n = \frac{1}{M} \sum^{M}_{i=1} \boldsymbol{\phi}(X_i) [\boldsymbol{\phi}(X_i) - \gamma \boldsymbol{\phi} (X^{\prime}_i)]^T\] \[\hat{\mathbf{b}}_n = \frac{1}{M} \sum^{M}_{i=1} \boldsymbol{\phi}(X)R_i\]

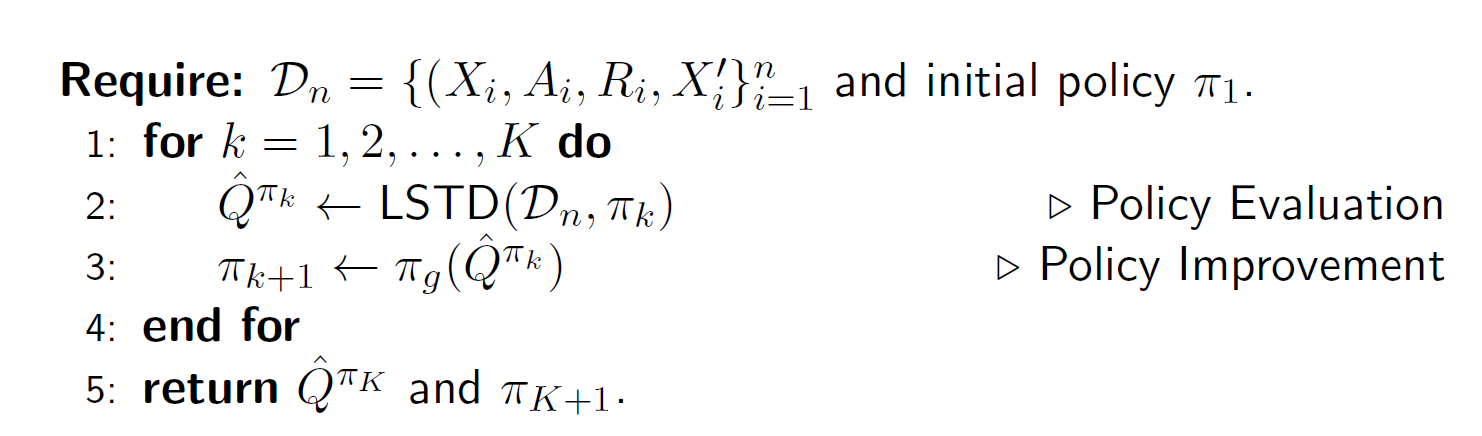

We can use LSTD to define an approximate policy iteration procedure to obtain a close to optimal policy (LSPI):

Semi-Gradient TD

Suppose that we know the true value function \(V^{\pi}\) and we want to find an approximation \(\hat{V}\), parameterized by \(\mathbf{w}\). The population loss:

\[V = \underset{\hat{V} \in \mathbf{F}}{\arg\min} \frac{1}{2}\|V^{\pi} - \hat{V}\|^2_{2, \mu}\]

Using samples \(X_t \sim \mu\), we can define an SGD procedure that updates \(\mathbf{w}_t\) as follows:

\[\begin{aligned} \mathbf{w}_{t+1} &\leftarrow \mathbf{w}_t - \alpha_t \nabla_{\mathbf{w}_t} [\frac{1}{2} |V^{\pi} (X_t) - \hat{V}(X_t; \mathbf{w}_t)|^2]\\ &= \mathbf{w}_t + \alpha_t (V^{\pi} (X_t) - \hat{V}(X_t; \mathbf{w}_t))\nabla_{\mathbf{w}_t} \hat{V}(X_t; \mathbf{w}_t) \end{aligned}\]If we select proper step size \(\alpha_t\), then the SGD converges to the stationary point.

When we do not know \(V^{\pi}\), we can use bootstrapped estimate (TD estimate \(\hat{T}^{\pi}V_t\)) instead:

\[\mathbf{w}_{t+1} \leftarrow \mathbf{w}_t + \alpha_t (\hat{T}^{\pi}V_t - \hat{V}(X_t; \mathbf{w}_t))\nabla_{\mathbf{w}_t} \hat{V}(X_t; \mathbf{w}_t)\] \[\mathbf{w}_{t+1} \leftarrow \mathbf{w}_t + \alpha_t (R_t + \hat{V}(X^{\prime}_t; \mathbf{w}_t) - \hat{V}(X_t; \mathbf{w}_t))\nabla_{\mathbf{w}_t} \hat{V}(X_t; \mathbf{w}_t)\]

The substitution of \(V^{\pi}(X_t)\) with \(\hat{T}^{\pi}V_t (X_t)\) does not follow from the SGD of any loss function. The TD update is note a true SGD update, that is, we call it a semi-gradient update.