Basic Adaptive LR Algorithms

Algorithms with Adaptive Learning Rates

Learning rate is reliably one of the hyperparameters that is the most difficult to set because it has a significant impact on model performance. At each iteration, the cost is often highly sensitive to some directions in parameter space and insensitive to others. Momentum solves some of the problems in the cost of introducing another hyperparameter. Thus, it is natural to consider algorithms that have separate learning rate for each parameter and automatically adapt learning rates for each of the parameter.

The delta-bar-delta algorithm (base on full-batch) is an early heuristic approach to adapting individual learning rates for model parameters during training, it is based on intuitive idea similar to momentum:

If the partial derivative of the loss, with respect to a given model parameter, remains the same sign, then the learning rate should increase, if the partial derivative with respect to the parameter changes sign, then the learning rate should decrease.

We would like to extend the idea to mini-batch scenario.

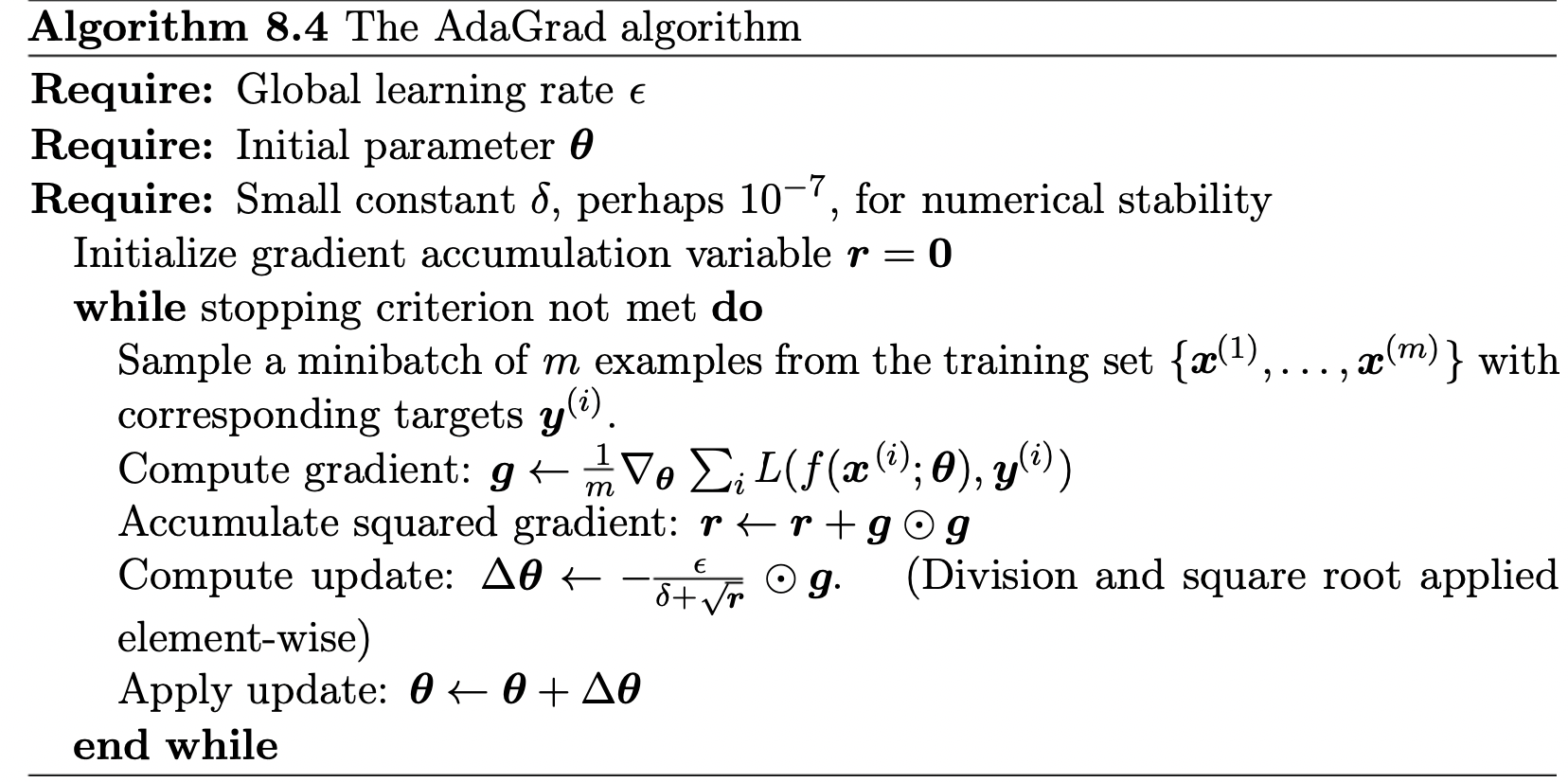

AdaGrad

The AdaGrad algorithm, individually adapts the learning rates of all model parameters by scaling them inversely proportional to square root of the sum of all of their historical squared values:

If \(g_1\) is large constantly larger than \(g_2\), then:

\[\sqrt{r_1} > \sqrt{r_2} \implies \epsilon_1 < \epsilon_2\]

This makes sense because we want to take a small step in the gradient direction when the magnitude of gradient is large, especially when we have noisy gradient. Conversely, we would like to take slightly larger step than large gradient case when we have smaller gradient.

If \(r_i\) is less than 1, we have increasing learning rate compare to base learning rate:

\[r_i < 1 \implies \epsilon_i > \epsilon\]

This helps us to get out of the local minimum or flat region of the surface by taking larger steps.

AdaGrad is designed to converge rapidly when applied to a convex optimization problem, so when it finds a convex structure, it can converge rapidly.

However, in non-convex optimization problem (training neural networks) the accumulated gradient \(\mathbf{r}\) starts accumulating at the beginning, this will introduce excessive decrease in the effective learning in later training steps (at end, we will have large \(\mathbf{r}\), so the learning rates will be small to prevent effective learning in later stages or early large gradients will prevent learning in early stages).

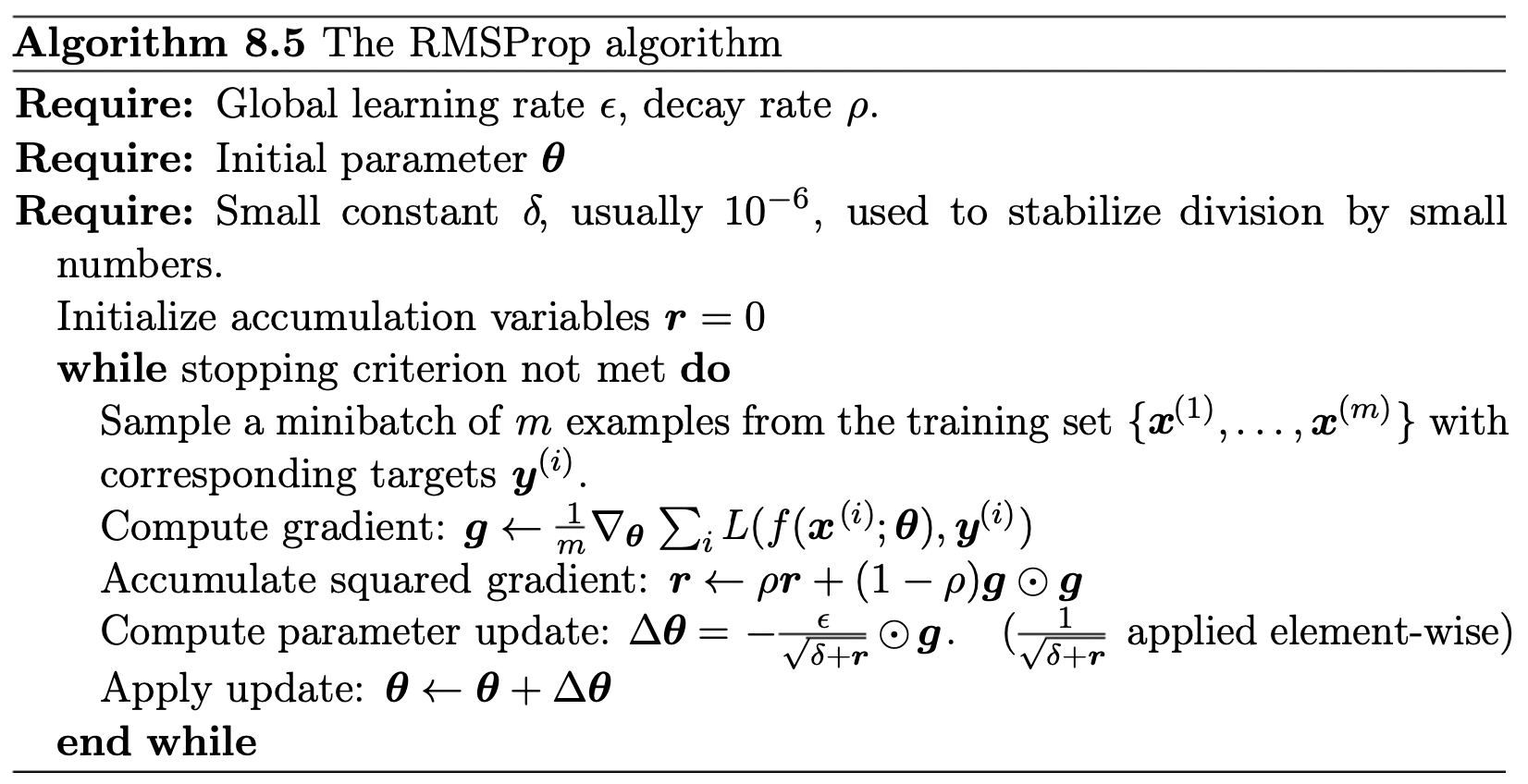

RMSProp

The RMSProp algorithm modifies AdaGrad so that it can perform better in non-convex setting by changing the gradient accumulation into an exponentially weighted moving average, so the early accumulation becomes less and less important. This structure helps in non-convex problem by discarding the history from extreme past so when we arrive at a convex bowl, we have sufficiently large learning rate to converge rapidly.

The algorithm introduces a new parameter \(\rho\) that controls for the weight of accumulated gradient.